How to Use ChatGPT's Parental Controls

The AI chatbot’s new guardrails, which include content filters and safety alerts, are designed to protect younger users, especially teens in emotional distress. But risks remain.

ChatGPT just released a set of optional parental controls, one step toward making the popular AI chatbot safer for its large pool of teen users. To implement the controls, parents need their own ChatGPT account, which can then be linked to a child’s via an email invitation from either user.

Once the accounts are connected, parents can limit their child’s exposure to sensitive content and turn off other features, like image generation and ChatGPT’s ability to recall past conversations. Parents will also receive safety alerts if their child exhibits warning signs of self-harm, according to OpenAI, the company behind the chatbot.

But even with the changes, some experts say ChatGPT and other AI platforms remain risky for children and teens.

About a quarter of U.S. teens now use ChatGPT to help with schoolwork, according to the latest Pew Research data. And according to separate survey data from a recent Common Sense Media report, about 1 in 3 teens between 13 and 17 say they’ve relied on an AI companion for social interaction and relationships.

ChatGPT’s new safeguards arrive after high-profile reports of real-life harms caused by the AI chatbot. Some young users have developed unhealthy attachments to the tool, and others have allegedly received dangerous advice during a mental health crisis.

Earlier this year, 16-year-old Adam Raine died by suicide after months’ worth of ChatGPT conversations in which he discussed his mental health struggles. The tool repeatedly directed Raine to support resources such as the national suicide hotline (available by dialing 988), according to reporting in The New York Times. But on other occasions, it responded in dangerous ways to statements that he was considering harming himself, according to a lawsuit filed by his parents. Ultimately, the suit alleges, the tool helped lead him down a path of "maladaptive thoughts" and isolation, contributing to his death.

"An AI companion is designed to create a relationship with the user, and our online experiences are designed to be positive," says Susan Gonzales, founder of AIandYou, a nonprofit dedicated to improved AI literacy. "It’s very enticing for someone who may be feeling lonely or depressed or bored to turn to this AI companion to feed them what they think they need."

In an emailed statement, OpenAI said, “Teen well-being is a top priority for us—minors deserve strong protections, especially in sensitive moments." The company cited measures it takes, such as "surfacing crisis hotlines, guiding how our models respond to sensitive requests, and nudging for breaks during long sessions."

The company has publicly acknowledged that ChatGPT’s programmed safeguards can degrade over long conversations, and says it’s working to strengthen them.

The Burden on Parents

Some parents would still prefer their kids to stop using AI entirely.

Emily Cherkin, a Seattle parent of two teens, publicly advocates for a far more intentional use of technology in classrooms. She views AI tools like ChatGPT as addictive and as an impediment to developing critical skills, from problem-solving to building relationships. But AI is becoming harder to avoid, she says, as textbooks, testing, and homework assignments are digitized within online-learning platforms and AI tools are embedded in the devices that schools provide to students.

Connect to Your Teen’s ChatGPT Account

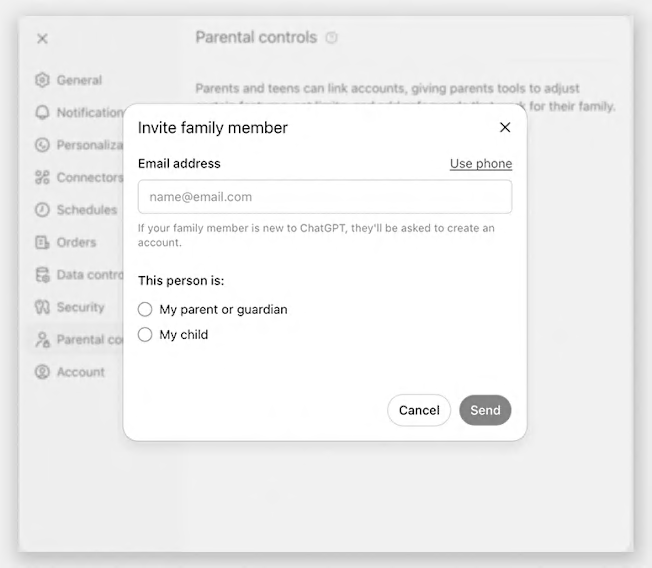

To set up parental controls, you first need to link your own ChatGPT account to your teen’s. (If you don’t have an account, it’s free to set one up from inside the ChatGPT app or your browser.)

Source: ChatGPT

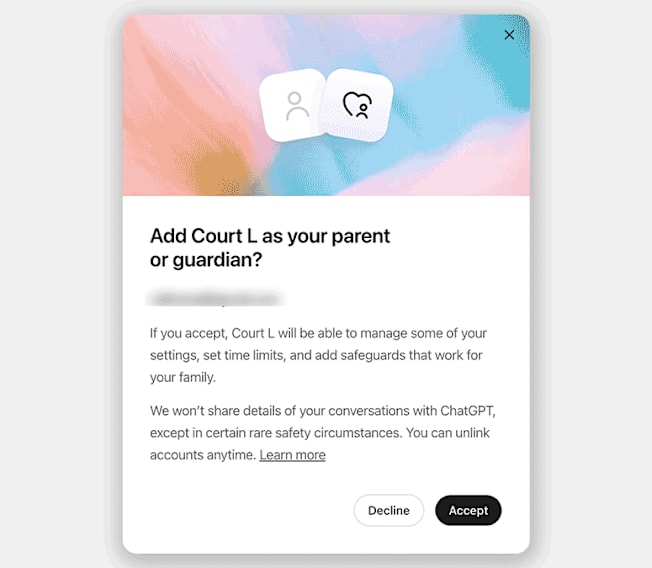

To link to your child’s ChatGPT account: Click on the round profile icon in the bottom left of your own ChatGPT account. Go to Settings > Parental controls > Add family member. Then enter your child’s email address and select "My child." This sends an email invitation to link accounts, which your child must accept. (Your child can also send you the invitation via the same menus; they’ll instead select "My parent or guardian.") Once the request is accepted, you’ll see the child’s account listed in the "Parental controls" menu, and you can turn certain features on or off (details below).

Note: Linking accounts doesn’t grant you access to your child’s ChatGPT conversation history, unless your child says something that triggers a safety notification.

Source: ChatGPT

Reduce Sensitive Content

Once your teen’s account is linked, ChatGPT should automatically reduce the account’s exposure to sensitive content, which includes "graphic content, viral challenges, sexual, romantic or violent roleplay, and extreme beauty ideals," according to OpenAI.

But ChatGPT may still be capable of engaging in harmful conversations with your teen, says Robbie Torney of Common Sense Media. AI chatbots are designed to encourage continued engagement by being helpful and validating, which can occur even if a user is displaying dangerous views or is in an altered state of mind. Chatbots also speak naturally and authoritatively, with responses tailored to the user, which can build trust and increase the potential for developing emotional dependence.

"If a teen has a distorted view of reality or is asking a chatbot for that 900-calorie diet, the chatbot might say, ‘Hey, that’s right,’ or ‘Hey, I believe you,’" Torney says. “They’re not designed to behave the way that a human would. We’ve seen this lead to very tragic outcomes with real teens getting harmed.”

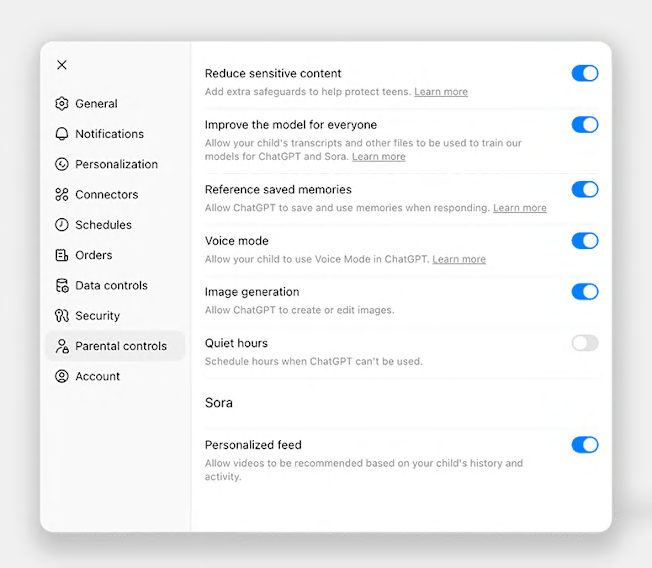

To double-check the "sensitive content" setting: Go to Settings > Parental controls, and make sure that "Reduce sensitive content" is toggled on at the top. (It should be turned on by default once you link accounts.)

Source: ChatGPT

Set Up Safety Notifications

ChatGPT will now send you an alert if your teen displays warning signs of self-harm, according to OpenAI. Safety notifications are automatically turned on once you link accounts, but you can change where and how you receive them (details below).

ChatGPT’s systems will escalate content that displays signs of acute distress for review by a "small team of specially trained people," OpenAI says, and parents can expect to receive a notification within hours.

There’s room for additional measures, Torney says, such as blocking younger users from engaging in emotional support conversations altogether or improving age-verification measures. "We’re simultaneously saying: Yes, this is a good step—and there’s a lot more that we can be doing in this space to keep teens safe on these platforms."

Currently, people are supposed to be at least 13 years old to sign up for ChatGPT, and to receive a parent’s permission if they are younger than 18, but there are no verification measures beyond new users entering their own birthdate. OpenAI says its chatbots will eventually be able to detect that a user is under 18, and automatically direct them "to a ChatGPT experience with age-appropriate policies, including blocking graphic sexual content and, in rare cases of acute distress, potentially involving law enforcement to ensure safety."

To modify safety alerts: Go to Settings > Parental controls. Under Safety notification, select Manage notifications. You can turn on push notifications, email alerts, and SMS texts. Within the same notifications management menu, you can also choose to get notified when ChatGPT responds to requests from your teen that "take time, like image research or image generation."

Turn Off Image Generation

With a brief text prompt, ChatGPT can generate a wide variety of images, from a brand-new graphic meant to be inserted into a school report to a doctored version of an existing photo uploaded from your teen’s phone or computer. This introduces some risk that a teen could create sexually explicit or violent content, images that reinforce racial or gendered stereotypes, or edited photos that exacerbate distorted body image issues, and more.

To block this feature: Head to Settings > Parental controls, and toggle off "Image generation."

Set Quiet Hours

Parents might prefer their teen to use ChatGPT only during certain hours, such as when working on homework after school. Parents can now set timed limits on usage for the linked account.

To turn on quiet hours: Head to Settings > Parental controls, and toggle on "Quiet hours." Then designate when quiet hours begin and end.

Decide Whether to Turn Off Chat History

ChatGPT keeps a record of a logged-in user’s chat history—available on the left-hand rail when viewed in a browser—which allows the bot to pick up conversations where they left off, and respond more personally to new prompts by the user. How this saved memory is handled is one of the most complicated issues affecting ChatGPT’s safety and usefulness, Torney says.

Saved memory can elevate the risk that a user develops a false sense of intimacy, trust, or attachment with a chatbot, he says, or relies on dangerous advice from ChatGPT during a mental health crisis.

The new ChatGPT parental controls allow parents to turn this feature off, but Common Sense Media suggests that parents leave it on, for multiple reasons. For one, preserving memory makes ChatGPT more useful as an educational tool—when a teen is, say, studying for an exam over multiple sessions or building knowledge across a semester. And two: Saved memory may help ChatGPT better identify patterns of mental health distress across multiple conversations and notify parents. “This is a complicated area, and reasonable parents might come to their own conclusions about why they might want to have memory off,” Torney says.

To turn off chat history: Head to Settings > Parental controls, and toggle off "Reference saved memories."

Opt Out of Model Training

ChatGPT collects conversational data from its users to further train its models. (This function is separate from ChatGPT’s memory function, detailed above, which allows ChatGPT to remember information shared across a single user’s conversations.)

Although OpenAI states that it uses this data only to improve the platform, rather than for advertising and marketing, you can turn this feature off—for both your child’s account and your own—if you’re concerned about privacy and data collection.

To opt out of model training: Head to Settings > Parental controls, and toggle off "Improve the model for everyone." To turn off this feature in your own account, go to Settings > Data controls, and toggle off the same "Improve the model for everyone" option.