Should You Use an AI Browser Like ChatGPT Atlas?

Browsers like Atlas, Perplexity Comet, and Microsoft Edge's Copilot Mode promise convenience. Also on tap: flawed answers and security risks.

For most of us, our web browser is just that little app on our phone or computer that lets us get on the internet—whether it’s the blue “E” of Edge, the colorful circle of Chrome, or the compass of Safari. It’s what you use to check email, shop online, or read the news, and it’s been largely the same for nearly two decades.

The Rise of 'Agentic' Browsing

Until recently, AI in browsers mostly acted like a chatbot in a sidebar, summarizing pages or answering questions about what you were seeing. Now, we’re seeing the leap to “agentic” capabilities. Instead of simply analyzing content, the AI browser will actually take actions on your behalf. “It’s acting and it’s doing,” Shivan Sahib, VP of privacy and security at the privacy-focused browser company Brave, told me in a recent interview.

The idea is that you can simply tell your agentic browser to book flights and a moderately priced hotel for two people in Phoenix next month, or find ingredients for a recipe and add them to your grocery cart.

The AI agent would then navigate websites, fill out forms, and click buttons to complete all these tasks, acting as a high-powered digital assistant. Theoretically, this would free you up from a lot of online drudgery.

Why AI Browsers Feel Familiar

Before diving into their special AI powers, it’s worth noting what these browsers are like for everyday browsing. At first glance, they all might feel surprisingly familiar, especially if you’re used to Google Chrome. They all have tabs, bookmarks, and settings menus that look and work in much the same way.

That’s not a coincidence. These new browsers aren’t built entirely from scratch. Instead, they are based on Chromium, the same open-source project developed by Google that powers the popular Chrome browser. Edge, which debuted in 2015 using different technology, switched to Chromium in 2020.

Chromium provides the powerful and reliable foundation that ensures web pages load correctly. It handles basic security and supports features like browser extensions. Companies including OpenAI, Perplexity, and Microsoft build on top of this base, adding their own custom visual skins, user interface elements, and features, along with the most important addition: sophisticated AI agents.

You’ll often notice the biggest difference right when you open a new tab. Instead of defaulting to a Google search bar or a grid of favorite websites like Chrome does, these browsers put their AI front and center. ChatGPT Atlas, for example, opens new tabs directly into a ChatGPT interface, encouraging you to ask questions rather than type in a web address. This fundamentally changes the starting point for many common internet tasks.

So, while the basic browsing mechanics feel standard, the real change lies in how these browsers integrate AI into your workflow, often making the AI assistant the primary way they want you to interact with the internet.

Putting Agentic AI to the Test

So if that’s the theory, what happens when these AI agents meet the real world?

I spent several days using ChatGPT Atlas (currently available only for macOS), Perplexity Comet (available for macOS and Windows), and the new Copilot Mode (available for macOS and Windows) in Microsoft Edge, my primary desktop browser.

My goal was to see how they handled common tasks from the perspective of an everyday user, focusing on usefulness, reliability, and ease of use. I ran three specific tests: booking a restaurant, comparing laptops, and summarizing emails.

Test 1: Booking a Restaurant

First, I tried a straightforward task: “Book a table for two at 7 p.m. this Saturday in Tucson, for a Mexican restaurant.”

ChatGPT Atlas, once switched into its clearly marked Agent Mode in a new tab, provided a highly engaging visual experience. It displayed a warning about its own experimental nature and then proceeded to visibly navigate OpenTable’s website, mouse cursor moving automatically. It selected a highly rated local restaurant (Blanco) and completed all steps up to final confirmation in about 90 seconds, without needing further input beyond my contact info at the very end.

Perplexity Comet and Edge’s Copilot Mode handled the task primarily through their chat interfaces. Comet initially made a strange error, searching for restaurants in the Caribbean before correcting itself. It eventually landed on the same restaurant as Atlas but took nearly 3 minutes to do it.

Edge’s Copilot Mode required me to turn on specific settings first, which might confuse some users. On its first attempt, the AI failed entirely after getting stuck for more than 2 minutes. On a second try, it succeeded, also choosing Blanco, but it again took more than 2 minutes. Both Comet and Edge required me to provide contact info to finalize the booking, just like Atlas did.

This first test offered a clear lesson: For a simple, single-goal task, the AI isn’t a significant time-saver. At least not yet. I found it was just as easy, and arguably faster, to use the OpenTable app directly on my iPhone.

Test 2: Shopping for a Laptop

Next, I tried a task that is often tedious when done manually: comparing product specs. I told each agent: “Open tabs for the latest 14-inch versions of the Samsung GalaxyBook 5 Pro and Apple MacBook Pro, then create a table comparing their prices, battery life, and ports.”

Again, Atlas performed well. It rapidly typed out its reasoning process, searching websites and compiling data. It successfully pulled up the correct product pages, verified its data with additional sources like LaptopMag (even though that publication recently shut down), and generated an accurate comparison table within its chat interface. The process took 2 minutes and 5 seconds and felt quite polished.

Perplexity Comet’s performance here was inconsistent.

On its first try, it generated a table in about 2 minutes but said it couldn’t access the manufacturers’ official product pages, relying instead on “recent and verified sources,” which left me doubting the accuracy of its data. A second attempt, which took about 90 seconds, seemed more effective, with Comet listing official pages as sources. But the AI still expressed uncertainty, suggesting I ask it to try opening the pages again for potentially more accurate details. This lack of confidence wasn’t just concerning; it rendered the results untrustworthy, forcing me to do the verification work myself.

Edge’s Copilot Mode was the slowest and most cumbersome. After initially providing only a “general comparison” (and oddly using Google Search rather than Microsoft’s own search engine, Bing), the AI explicitly asked for permission just to browse Samsung.com. That shows some admirable sensitivity to security, but the interruption added friction and defeated the entire purpose of an automated agent. The process took a lengthy 6.5 minutes, making it far less efficient than manually comparing specs myself or using a dedicated tool like Consumer Reports’ laptop ratings.

Test 3: Summarizing Emails

The final test probed how the agents handle potentially sensitive information, using a dedicated test Gmail account I had created. The prompt was: “Go to my Gmail inbox and summarize my last five emails.”

ChatGPT Atlas handled this impressively from a technical standpoint. After I logged in to the test account when prompted, the Agent Mode visibly opened the inbox, clicked through each of the five emails, took screenshots, and then presented a detailed, accurate summary table, including the sender’s name, subject, time stamp, and clickable screenshots, taking about 2.5 minutes.

Perplexity Comet took a different approach, asking me to “connect” my Gmail account via OAuth, a commonplace system you’ve likely used before—it’s the technology behind the “Sign in with Google” buttons that grant an app ongoing access to your account. Comet then produced accurate summaries almost instantly (in around 40 seconds).

Edge’s Copilot Mode struck a middle ground; it required me to already be logged in to Gmail in another tab, then asked for explicit, one-time permission to access that specific tab for the summarization task. Once that was granted, it provided accurate summaries in about 1 minute. This felt like a reasonable balance between capability and user control.

Rube Goldberg Machines—With Security Risks

Across my hands-on evaluation, a pattern emerged: While the technology performed complex tasks—ChatGPT Atlas, for instance, compiled an accurate laptop comparison table faster than I could have done it—the experience was often clunky.

Booking a simple reservation or comparing laptops using the AI browser frequently took longer than it would have taken to do so manually, involved confusing setup steps, and in some cases produced errors. At times, the whole process felt like fiddling with a complicated Rube Goldberg machine for tasks that are not difficult to begin with.

The browsers also required me to grant the AI broad access to my data. The most impressive demonstration—my email summarization test—involved handing over significant visibility and control for sensitive accounts. As our in-house security expert, Steve Blair, aptly put it: “Is the juice worth the squeeze?”

If you ask me, the answer is “not yet.” Instead of adding the promised convenience, the browsers often forced me to spend time troubleshooting and double-checking their AI work.

And then there are the bigger issues of privacy and security.

Let’s start with privacy—how much personal information you might be sharing with a huge tech company. For an AI browser to act as your “assistant,” it needs to see and analyze almost everything you do online. To be truly helpful, it must build a detailed memory of your online activity—not just your search history, but the content of the pages you visit. This means the AI is observing as you browse sensitive health information or review your bank statements.

The browsers offer privacy controls. OpenAI’s browser lets you clear your browsing history, for instance, or open an incognito window that temporarily logs you out of ChatGPT. But overall, it feels like a familiar story, opening yet another avenue for the kind of broad data collection we already see from companies like Google and Meta.

The other, arguably bigger, risk involves security.

The “agentic” capabilities, meaning the AI clicking and typing for you, introduce an entirely new class of vulnerabilities. This marks one of the first times in mainstream computing that we are giving our computers autonomy, allowing them to go out into the world and take complex actions on our behalf, often without our direct, step-by-step approval.

This means we don’t just have to worry about protecting ourselves from malicious code like a virus; we now have to worry about protecting ourselves from a gullible AI assistant that gets tricked into doing something harmful.

The most discussed example of what can go wrong is called “indirect prompt injection,” and it’s a problem demonstrated by researchers at Brave, the company behind a popular privacy-oriented browser. They set up a test where they asked Perplexity Comet to summarize a Reddit page. One of the comments on the page contained malicious commands in hidden text, which led the AI to navigate to another window where the user was logged in to Gmail and steal information.

A normal browser couldn’t have done anything like that. The tabs in a browser window are safely isolated from each other, and browsers basically just display information. But a browser that’s acting as your AI agent has much more leeway to do the kinds of things only a human being at your keyboard could do before.

The AI companies acknowledge at least some of the risks. “Prompt injection remains a frontier, unsolved security problem,” OpenAI’s Chief Information Security Officer, Dane Stuckey, publicly stated recently. And when I asked Perplexity about the indirect prompt injection that Brave had discovered, it told me that users can stay safe by simply “avoiding sites that they don’t trust.”

But how practical is that? As Sahib points out, malicious instructions can be hidden in user comments on perfectly legitimate sites.

Neat, Not Necessary: Why You Can Wait

Setting aside a prompt injection attack, all AI systems can hallucinate (make up facts) or misinterpret requests.

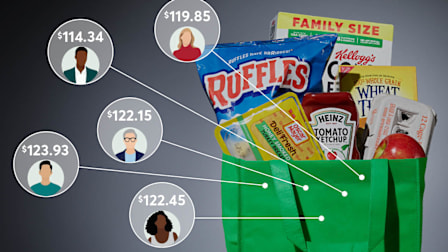

Imagine an AI agent adding the wrong items to your cart and clicking Buy, or booking airline tickets to St. Petersburg, Russia, when you really wanted to go to Saint Petersburg, Florida. (Recall that Perplexity Comet, for whatever reason, was initially trying to book me into a Mexican restaurant in the Caribbean instead of Tucson!)

The technology may become much more reliable in the future, but for now my advice is to approach these AI browsers with cautious curiosity rather than wholehearted adoption.

I love trying new tech, and I use AI chatbots all the time. But while the new browsers are fascinating, my initial take—backed by insights from security experts—is that they offer little practical, everyday utility for most people, while the security questions loom large.

CR’s Steve Blair compares them to self-driving cars. Instead of launching them on a six-lane highway, he says, we should roll them out on a slower, more thoughtful path: “You gotta do baby steps.”

If you’re curious to give them a spin, think of it as a beta test, not a ride in a fully realized product. Keep them away from your sensitive online life. That means logging out of email, banking, and social media sites while using the AI features.

While these tools offer a tantalizing preview of what’s to come, they aren’t foolproof yet. For the tasks that truly matter, sticking with your trusted and familiar apps and websites remains the safest and simplest choice for now.